Understand What Cache Is and How to Clear Cache on Popular Browsers


- What is Cache?
- Types of Cache
  - Browser Cache
  - Application Cache
  - Server Cache
  - Operating System Cache
  - Hardware Cache
- Benefits of Cache
  - Speeds Up Data Access
  - Reduces System Load
  - Saves Bandwidth
  - Improves User Experience
  - Increases Hardware Processing Efficiency
- How Cache Works
  - Cache Hit and Cache Miss
  - Storing Data in Cache
  - Cache Write Policies
  - Multilevel Cache
- Algorithms Used in Cache Memory
  - Least Recently Used (LRU)
  - First In, First Out (FIFO)
  - Most Recently Used (MRU)
  - Least Frequently Used (LFU)
  - Random Replacement (RR)
  - Comparison of Cache Algorithms
- How to Increase Cache Memory
  - Increase Cache Memory (RAM) Capacity
  - Proper Cache Layer Configuration
  - Use Distributed Cache Memory
  - Optimize Cache Configuration
  - Enhance Hardware and Software Configuration
- How to Clear Cache Memory on Different Browsers
  - Google Chrome
  - Mozilla Firefox
  - Microsoft Edge
  - Safari
  - Opera
- Key Cache-Related Terms You Should Know
  - Cache Hit and Cache Miss
  - Cache Eviction
  - Cache Latency
  - Write Through and Write Back
  - Cache Coherence
  - Cache Poisoning
  - Cache Warmup
  - Cache Invalidation
- Conclusion

- Conclusion

What is Cache?

Cache (also called cache memory) is a mechanism for temporarily storing frequently used data so that it can be accessed and processed faster. When you visit a website or use an application, data such as images, CSS files, JavaScript, or even the results of complex calculations can be stored in the cache. The system can then reuse the stored data instead of downloading or computing it again, which reduces waiting time and can significantly improve performance.

For example, when you first visit a website, the browser will download all data from the server. However, on subsequent visits, data stored in the cache will be used, making the website load faster without needing to reload everything. This is why cache plays a crucial role in improving user experience and optimizing system resources.

Types of Cache

Cache memory is divided into several different types, each serving a specific purpose in storing and processing data. Depending on the operating environment, these types of cache will optimize performance at various levels, from individual users to the entire server system and hardware devices.

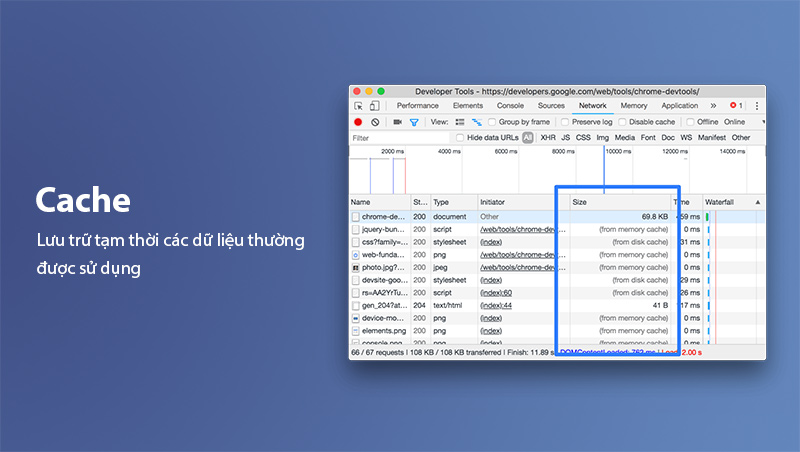

Browser Cache

This is the most common type of cache, and most people use it without realizing it. The browser cache stores website files such as images, videos, CSS files, JavaScript, and other interface components. When you revisit a website, the browser fetches these files from the cache instead of downloading them again from the server. This reduces page load times and saves bandwidth, which matters most for content-heavy websites.

For example, when you reopen a news website, the logo and overall layout typically appear immediately thanks to data stored in the cache. This not only speeds up the loading but also reduces the load on the website’s server.

Application Cache

Application Cache is used in web or mobile applications to store temporary data related to users or repeated actions. For instance, an e-commerce app might cache the list of products you've viewed or your shopping cart so that they remain available even without an internet connection.

This type of cache is particularly useful in enhancing the user experience, as the app can still operate smoothly even in unstable network conditions.

Server Cache

This type of cache is used on the server side and serves to store frequently queried results or data that is requested repeatedly. Popular tools like Redis or Memcached are often used to implement server cache, helping reduce the load on databases and speed up system response times.

For example, on an e-commerce website, the list of best-selling products or other frequently queried content can be stored in the server cache so that it is returned quickly instead of being recomputed from the database on every request.
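To make the server-cache idea concrete, here is a minimal Python sketch of the common cache-aside pattern: check the cache first and query the database only on a miss. A plain dictionary stands in for Redis or Memcached, and `load_best_sellers_from_db` and `db_calls` are hypothetical names used only for illustration; this is not the API of either tool.

```python
# A plain dict stands in for a server cache such as Redis or Memcached
# (hypothetical stand-in; neither tool's API is used here).
cache: dict[str, list[str]] = {}
db_calls = 0  # counts how often the "database" is actually queried


def load_best_sellers_from_db() -> list[str]:
    """Hypothetical expensive database query."""
    global db_calls
    db_calls += 1
    return ["keyboard", "mouse", "monitor"]


def get_best_sellers() -> list[str]:
    key = "best_sellers"
    cached = cache.get(key)
    if cached is not None:
        return cached                      # cache hit: no database work
    result = load_best_sellers_from_db()   # cache miss: query the database
    cache[key] = result                    # store a copy for later requests
    return result


get_best_sellers()  # miss: queries the database
get_best_sellers()  # hit: served from the cache
print(db_calls)     # 1
```

However many times `get_best_sellers` is called afterward, the database is queried only once until the cached entry is removed.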

Operating System Cache

The operating system also uses cache to store frequently used files, applications, or processes. This helps speed up system execution. For example, when you open a file or application for the first time, the system will store information in the cache, and when reopening it, load times will be significantly faster.

Hardware Cache

This type of cache is directly integrated into hardware components, such as cache memory in the CPU, GPU, or SSD. Hardware cache helps speed up data processing by storing instructions or data that the system predicts will be used soon.

For example, L1 or L2 cache on a CPU helps enhance the performance of computing tasks, especially for complex processes or tasks that involve handling large volumes of data in a short time.

Using cache effectively not only improves overall performance but also reduces system load, which is particularly important in applications with high traffic.

Benefits of Cache

Using cache not only improves speed and performance but also brings many practical benefits for both end users and system developers. Here are the key benefits that cache provides:

Speeds Up Data Access

Cache stores frequently accessed data, minimizing the time spent loading data from slower sources like disks or databases. This is particularly important for websites or applications with high traffic, where every millisecond impacts the user experience.

For example, when accessing an e-commerce website, product images and the homepage layout are often loaded from browser cache, displaying immediately instead of waiting for the server to load them.

Reduces System Load

By answering repeated requests from a cache (e.g., the browser cache or a server-side cache), the system reduces the number of requests that reach the server and database. This lowers server load and saves resources, especially for large databases.

Practical application: In complex web systems, implementing server cache with tools like Redis or Memcached helps optimize performance, especially when handling repeated read requests.

Saves Bandwidth

By reusing stored data, cache minimizes the amount of data that needs to be downloaded from the server. This not only saves bandwidth for both users and service providers but also improves the experience in areas with slow network speeds.

Improves User Experience

A website or application that loads quickly doesn’t just provide a better user experience but also positively impacts business metrics. Studies show that faster page load times can increase conversion rates, retain customers longer, and reduce bounce rates.

Notable statistic: A widely cited industry figure holds that a one-second delay in page load time can reduce conversions by about 7%.

Increases Hardware Processing Efficiency

In systems with hardware caches (such as those in the CPU or GPU), frequently used instructions and data are kept close to the processor, so repeated work does not have to wait on slower main memory. This is crucial for high-performance applications such as image processing, big data, and artificial intelligence (AI).

Thanks to these benefits, cache is not just a tool for optimizing performance but also a key factor in making systems more stable and reliable. In the next section, we will take a closer look at how cache works and why it can enhance performance in such a significant way.

How Cache Works

Cache functions as an intermediary layer between the user and the main storage system, speeding up the data retrieval process by storing frequently used data. Below is the basic process of how cache operates:

Cache Hit and Cache Miss

- Cache Hit: When a data request is made to the cache and the data is already available, the system returns the data immediately from the cache without needing to access the main storage. This helps reduce latency and improve processing speed.

Example: When you reopen a previously visited webpage, images and CSS files are displayed from the browser cache.

- Cache Miss: If the requested data does not exist in the cache, the system must retrieve the data from the main storage and then store a copy of the data in the cache for future requests.

Example: When you visit a webpage for the first time, all resources are loaded from the server, and cacheable resources are stored in the cache as they are downloaded.
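Python's standard library offers a convenient way to observe hits and misses. The sketch below uses `functools.lru_cache`, whose `cache_info()` method reports hit and miss counts; `fetch_resource` is a hypothetical stand-in for downloading a file, and its body only runs on a miss.

```python
from functools import lru_cache


@lru_cache(maxsize=128)
def fetch_resource(url: str) -> str:
    # Hypothetical stand-in for downloading a resource from a server.
    # This body only runs on a cache miss.
    return f"<contents of {url}>"


fetch_resource("/styles.css")  # miss: body runs, result is cached
fetch_resource("/styles.css")  # hit: result returned from the cache
fetch_resource("/logo.png")    # miss

info = fetch_resource.cache_info()
print(info.hits, info.misses)  # 1 2
```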

Storing Data in Cache

Data in cache is typically stored based on rules or algorithms (LRU, FIFO, MRU, LFU, or RR - which will be explained in more detail in the following sections) to ensure optimal performance and resource usage. The system selects the appropriate algorithm based on the specific needs, such as web applications, software, or hardware.

Cache Write Policies

There are three common write policies when storing data in the cache:

- Write-through: Data is written simultaneously to both the cache and the main storage. This ensures high data consistency but may reduce write performance.

- Write-back: Data is initially written only to the cache and is written to the main storage when needed (or when it is evicted from the cache). This method offers higher performance but increases the risk of data loss.

- Write-around: Data is written directly to the main storage, bypassing the cache. The cache will only store the data if it is subsequently read.
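The three write policies differ only in where a write goes and when. The following is a simplified Python sketch, assuming plain dictionaries stand in for both the cache and main storage; the class and function names are illustrative, not taken from any particular system. In the write-back version, a value that is never evicted (for example, because the process crashes first) never reaches main storage, which is the data-loss risk described above.

```python
class WriteThroughCache:
    """Writes update the cache and main storage at the same time."""

    def __init__(self, store: dict):
        self.store = store       # stands in for main storage (disk, database)
        self.cache: dict = {}

    def write(self, key, value):
        self.cache[key] = value
        self.store[key] = value  # synchronous write keeps both in sync


class WriteBackCache:
    """Writes go to the cache only; main storage is updated on eviction."""

    def __init__(self, store: dict):
        self.store = store
        self.cache: dict = {}
        self.dirty: set = set()  # keys changed in cache but not yet in store

    def write(self, key, value):
        self.cache[key] = value
        self.dirty.add(key)      # main storage is stale for this key for now

    def evict(self, key):
        if key in self.dirty:
            self.store[key] = self.cache[key]  # write back before dropping
            self.dirty.discard(key)
        self.cache.pop(key, None)


def write_around(store: dict, cache: dict, key, value):
    """Write straight to main storage and bypass the cache."""
    store[key] = value
    cache.pop(key, None)  # drop any stale copy; a later read re-caches it
```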

Multilevel Cache

In complex systems, cache is often implemented in multiple levels (L1, L2, L3) to optimize performance.

- L1 Cache: Closest to the CPU, very fast but with limited capacity.

- L2 Cache: Larger capacity, but slightly slower.

- L3 Cache: The largest cache level, with the lowest speed compared to L1 and L2.
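Real CPU caches are managed entirely by hardware, but the lookup order is easy to model in software. This is a simplified Python sketch assuming a tiny L1 dictionary in front of a larger L2 dictionary, with a hypothetical `loader` function playing the role of slow main storage; a value found in a lower level is promoted to L1 so the next access is faster.

```python
from typing import Any, Callable


class TwoLevelCache:
    """A small, fast L1 in front of a larger L2, backed by a slow source."""

    def __init__(self, l1_size: int, loader: Callable[[Any], Any]):
        self.l1: dict = {}
        self.l1_size = l1_size
        self.l2: dict = {}      # left unbounded here for brevity
        self.loader = loader    # hypothetical slow source (main memory, disk)

    def get(self, key):
        if key in self.l1:
            return self.l1[key], "L1"
        if key in self.l2:
            value, level = self.l2[key], "L2"
        else:
            value, level = self.loader(key), "source"
            self.l2[key] = value
        self._put_l1(key, value)  # promote so the next access hits L1
        return value, level

    def _put_l1(self, key, value):
        if len(self.l1) >= self.l1_size:
            self.l1.pop(next(iter(self.l1)))  # evict the oldest L1 entry
        self.l1[key] = value


cache = TwoLevelCache(l1_size=1, loader=lambda k: k * 2)
print(cache.get(1))  # (2, 'source')
print(cache.get(1))  # (2, 'L1')
print(cache.get(2))  # (4, 'source'); key 1 is pushed out of L1
print(cache.get(1))  # (2, 'L2')
```

The second element of each returned tuple shows which level served the request, which makes the hit path visible when experimenting.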

Thanks to this intelligent operating mechanism, cache not only speeds up data access but also optimizes resource utilization, providing significant benefits for both users and developers. In the next section, we will explore the algorithms used in cache memory to understand more about managing and optimizing storage performance.

Algorithms Used in Cache Memory

To manage and optimize the performance of cache, storage systems typically use intelligent algorithms to determine which data should be stored and when old data needs to be evicted. Below are the most common algorithms in cache memory:

Least Recently Used (LRU)

- How it works: Evicts the least recently used data to free up space for new data.

- Advantages:

- Effective in systems where old data is less likely to be accessed again.

- Straightforward to implement by combining a hash table (for fast lookups) with a doubly linked list (to track recency).

- Disadvantages: Requires tracking the history of data access, leading to higher management costs in large systems.

- Applications: Commonly used in CPU caches (usually as a simplified pseudo-LRU approximation), web browsers, and databases.
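A common way to build an LRU cache in Python is `collections.OrderedDict`, which keeps keys in order and can move a key to the end or pop the oldest key in constant time. The class below is a minimal sketch; returning `None` on a miss is a simplifying assumption.

```python
from collections import OrderedDict


class LRUCache:
    def __init__(self, capacity: int):
        self.capacity = capacity
        self.data: OrderedDict = OrderedDict()  # least recent first

    def get(self, key):
        if key not in self.data:
            return None                # cache miss
        self.data.move_to_end(key)     # mark as most recently used
        return self.data[key]

    def put(self, key, value):
        if key in self.data:
            self.data.move_to_end(key)
        self.data[key] = value
        if len(self.data) > self.capacity:
            self.data.popitem(last=False)  # evict least recently used


cache = LRUCache(2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")       # "a" is now the most recently used
cache.put("c", 3)    # evicts "b", the least recently used
print(list(cache.data))  # ['a', 'c']
```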

First In, First Out (FIFO)

- How it works: Data that was added to the cache first will be evicted first, regardless of access frequency.

- Advantages:

- Easy to implement with queue data structures.

- Suitable for systems that do not require access frequency tracking.

- Disadvantages: Inefficient when old data is frequently accessed.

- Applications: Used in sequential storage systems, where the order of data entry is crucial.
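A FIFO cache only needs a queue of keys in insertion order. In this minimal Python sketch, a `collections.deque` records insertion order and a dictionary holds the values; note that reading a key does not change which entry is evicted next.

```python
from collections import deque


class FIFOCache:
    def __init__(self, capacity: int):
        self.capacity = capacity
        self.data: dict = {}
        self.order: deque = deque()  # keys in insertion order (a queue)

    def get(self, key):
        return self.data.get(key)    # reads do not affect eviction order

    def put(self, key, value):
        if key not in self.data:
            if len(self.data) >= self.capacity:
                oldest = self.order.popleft()  # the first key inserted
                del self.data[oldest]          # is the first one evicted
            self.order.append(key)
        self.data[key] = value


cache = FIFOCache(2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")       # unlike LRU, this read does not protect "a"
cache.put("c", 3)    # evicts "a", the first entry inserted
print(list(cache.order))  # ['b', 'c']
```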

Most Recently Used (MRU)

- How it works: Evicts the most recently used data. This is the opposite of LRU and is suitable when new data is less likely to be accessed again immediately.

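- Advantages: Useful when older data is reused more often than the most recent data, for example when a dataset larger than the cache is scanned repeatedly in the same order.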

- Disadvantages: Not ideal for high-speed systems where new data is accessed continuously.

- Applications: Used in specialized applications like transaction processing or financial systems.
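MRU uses the same bookkeeping as LRU but evicts from the opposite end. This minimal Python sketch assumes an `OrderedDict`-based design with the most recently used key kept last; only the eviction step differs from a typical LRU cache.

```python
from collections import OrderedDict


class MRUCache:
    def __init__(self, capacity: int):
        self.capacity = capacity
        self.data: OrderedDict = OrderedDict()  # most recently used last

    def get(self, key):
        if key not in self.data:
            return None
        self.data.move_to_end(key)  # mark as most recently used
        return self.data[key]

    def put(self, key, value):
        if key not in self.data and len(self.data) >= self.capacity:
            self.data.popitem(last=True)  # evict the most recently used
        self.data[key] = value
        self.data.move_to_end(key)


cache = MRUCache(2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")       # "a" becomes the most recently used
cache.put("c", 3)    # evicts "a" (an LRU cache would evict "b")
print(list(cache.data))  # ['b', 'c']
```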

Least Frequently Used (LFU)

- How it works: Evicts the least frequently accessed data from the entire history of cache operations.

- Advantages: Effective in systems where access frequency is a crucial factor.

- Disadvantages:

- High overhead due to the need to track and store access frequencies for each piece of data.

- Adapts poorly when "hot" data changes over time, because items that were popular in the past keep their high counts.

- Applications: Commonly applied in large database systems or cloud storage.
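The sketch below shows LFU in its simplest form: a counter per key and a linear scan for the smallest count when the cache is full. It is a minimal Python illustration; production LFU caches usually use more elaborate structures to avoid the O(n) scan, and this version forgets a key's count once it is evicted.

```python
from collections import Counter


class LFUCache:
    def __init__(self, capacity: int):
        self.capacity = capacity
        self.data: dict = {}
        self.freq: Counter = Counter()  # access count for each cached key

    def get(self, key):
        if key not in self.data:
            return None
        self.freq[key] += 1
        return self.data[key]

    def put(self, key, value):
        if key not in self.data and len(self.data) >= self.capacity:
            # Linear scan for the least frequently used key; min() returns
            # the first minimum, so ties go to the earliest-inserted key.
            victim = min(self.data, key=lambda k: self.freq[k])
            del self.data[victim]
            del self.freq[victim]   # this sketch forgets evicted counts
        self.data[key] = value
        self.freq[key] += 1


cache = LFUCache(2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")
cache.get("a")       # "a" has 3 accesses, "b" has 1
cache.put("c", 3)    # evicts "b", the least frequently used
print(sorted(cache.data))  # ['a', 'c']
```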

Random Replacement (RR)

- How it works: Evicts random data when space needs to be freed.

- Advantages: Simple and has low management overhead.

- Disadvantages: Inefficient, especially when important data is randomly chosen for eviction.

- Applications: Often used in systems with low performance requirements for cache or as a fallback for other algorithms.
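Random replacement needs almost no bookkeeping, which is why its management cost is low. In this minimal Python sketch, the option to pass a seeded `random.Random` instance is an assumption added so runs are reproducible.

```python
import random
from typing import Optional


class RandomCache:
    def __init__(self, capacity: int, rng: Optional[random.Random] = None):
        self.capacity = capacity
        self.data: dict = {}
        # A seeded Random instance can be passed in for reproducible runs.
        self.rng = rng if rng is not None else random.Random()

    def get(self, key):
        return self.data.get(key)

    def put(self, key, value):
        if key not in self.data and len(self.data) >= self.capacity:
            victim = self.rng.choice(list(self.data))  # any key may go
            del self.data[victim]
        self.data[key] = value


cache = RandomCache(2, rng=random.Random(0))
for i, key in enumerate(["a", "b", "c"]):
    cache.put(key, i)
print(len(cache.data))  # 2; either "a" or "b" was evicted at random
```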

Comparison of Cache Algorithms

The table below compares the cache management algorithms by effectiveness, management cost, and suitable applications:

| Algorithm | Effectiveness | Management Cost | Suitable Applications |
|---|---|---|---|
| LRU | High | Medium | Browser cache, CPU |
| FIFO | Medium | Low | Sequential storage systems |
| MRU | Medium | Low | Transaction processing, finance |
| LFU | High | High | Large databases, cloud storage |
| RR | Low | Low | Systems with low performance requirements |

Choosing the right algorithm depends on the characteristics and requirements of the system. In the next section, we will look at ways to increase cache memory and use it more effectively.

How to Increase Cache Memory

To optimize performance and ensure that cache operates effectively, one crucial factor is to increase cache memory. Increasing cache not only helps improve processing speed but also reduces the load on database systems or primary storage. Here are some ways to increase cache memory in different systems:

Increase Cache Memory (RAM) Capacity

For applications running on servers or end-user devices, increasing RAM capacity increases the memory available to caches that live in RAM, such as the operating system's file cache or an in-memory application cache. With more RAM, the system can keep more data in memory and access it faster without waiting for reads from hard drives or databases.

- Example: If you are deploying a web application or a database system, you can increase the RAM on the server to enhance the ability to store and process data more quickly in memory.

Proper Cache Layer Configuration

Using multiple cache layers helps increase the overall performance of the system. CPU cache levels (L1, L2, L3) are built into the processor and cannot be added through software configuration, so at the hardware level this means choosing processors whose L2 and L3 caches suit the workload. In software, the same idea applies by placing a small, fast cache in front of a larger, slower one.

- Example: When selecting computers or servers for applications that require complex calculations, choosing CPUs with larger L2 and L3 caches can reduce latency when accessing data from main memory.

Use Distributed Cache Memory

In cloud environments or distributed systems, you can use distributed cache solutions like Redis or Memcached to store data in the memory of multiple servers. This not only increases cache memory but also improves the availability and scalability of the system.

- Example: Web applications can use Redis as a distributed cache solution to store user sessions and common data across multiple servers, ensuring that all requests are processed quickly.
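One way a distributed cache spreads data across several servers is to hash each key to pick a node. The Python sketch below uses simple modulo hashing with hypothetical node names; it is a simplification, since real Redis or Memcached client setups may use other schemes such as consistent hashing.

```python
import hashlib

# Hypothetical node names; in practice each would be a cache server
# (for example, a Redis or Memcached instance) reached via a client library.
NODES = ["cache-1", "cache-2", "cache-3"]


def node_for_key(key: str, nodes: list[str] = NODES) -> str:
    """Pick the cache node responsible for a key using modulo hashing."""
    # Python's built-in hash() of a str is randomized per process, so a
    # stable hash is used to make every app server agree on the mapping.
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return nodes[int.from_bytes(digest[:8], "big") % len(nodes)]


for key in ["session:42", "session:43", "cart:7"]:
    print(key, "->", node_for_key(key))
```

With plain modulo hashing, adding or removing a node changes the mapping for most keys, which is the problem consistent hashing is designed to reduce.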

Optimize Cache Configuration

One way to increase the efficiency of cache usage is to configure the cache memory properly, ensuring that unnecessary or outdated data is not stored. You can configure algorithms such as LRU or LFU to ensure that cache only stores important data that is frequently accessed.

- Example: In a web application, you can set time-to-live (TTL) values for cached data versions to automatically remove outdated copies.
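TTL expiry can be sketched in a few lines: store an expiry timestamp with each entry and treat the entry as missing once that time has passed. In this minimal Python sketch the clock is injectable, an assumption that makes expiry easy to test; checking expiry lazily on read is one design choice among several.

```python
import time
from typing import Callable


class TTLCache:
    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock    # injectable so expiry can be tested
        self.data: dict = {}  # key -> (value, expires_at)

    def put(self, key, value):
        self.data[key] = (value, self.clock() + self.ttl)

    def get(self, key):
        entry = self.data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self.data[key]  # expired: remove the outdated copy
            return None
        return value


# Example with a fake clock so the expiry is deterministic.
now = [0.0]
cache = TTLCache(ttl_seconds=60, clock=lambda: now[0])
cache.put("homepage", "<html>v1</html>")
print(cache.get("homepage"))  # <html>v1</html>
now[0] = 61.0
print(cache.get("homepage"))  # None
```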

Enhance Hardware and Software Configuration

Faster storage, such as an SSD (Solid-State Drive) instead of an HDD (Hard Disk Drive), speeds up caches that are kept on disk, such as the browser's disk cache or temporary files. Additionally, optimizing the source code and software configuration helps minimize latency when storing and retrieving data from the cache.

- Example: Instead of storing large data files on traditional hard drives, you can switch to using SSD for storing temporary files and reducing response times.

Increasing cache memory and optimizing cache configuration is crucial to ensuring the performance and stability of a system. However, careful consideration of scalability and system-specific requirements is necessary to choose the appropriate method. In the next section, we will dive deeper into how to clear cache memory on different browsers to resolve issues related to cached data when browsing the web.

How to Clear Cache Memory on Different Browsers

When browsing the web, you may encounter issues where you cannot view the latest version of a webpage, or you see strange errors due to outdated cache. Clearing cache memory can help resolve these issues and ensure that you always access the latest version of a webpage. Below is a step-by-step guide to clear cache memory on popular browsers.

Google Chrome

To clear cache memory in Google Chrome, follow these steps:

- Step 1: Open Google Chrome and click on the three dots icon in the upper-right corner of the browser window.

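- Step 2: Select Delete browsing data (in older versions, More tools > Clear browsing data). You can also press Ctrl+Shift+Delete (Windows/Linux) or Cmd+Shift+Delete (macOS).

- Step 3: In the pop-up window, select the time range for clearing cache (e.g., Last hour, Last 24 hours, All time).

- Step 4: Check the box for Cached images and files, then click Delete data (Clear data in older versions).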

Mozilla Firefox

To clear cache memory in Mozilla Firefox, follow these steps:

- Step 1: Open Firefox and click on the three horizontal lines icon in the upper-right corner.

- Step 2: Select Settings > Privacy & Security.

- Step 3: Under the Cookies and Site Data section, click Clear Data.

- Step 4: Check the box for Cached Web Content, then click Clear.

Microsoft Edge

To clear cache memory in Microsoft Edge, follow these steps:

- Step 1: Open Edge and click on the three dots icon in the upper-right corner.

- Step 2: Select Settings > Privacy, search, and services.

- Step 3: Under the Clear browsing data section, click Choose what to clear.

- Step 4: Check the box for Cached images and files, then click Clear now.

Safari

To clear cache memory in Safari (on macOS), follow these steps:

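- Step 1: Open Safari, select Safari in the menu bar, then select Settings (Preferences on older macOS versions).

- Step 2: Go to the Advanced tab and check the box for Show Develop menu in menu bar (labeled Show features for web developers in recent versions).

- Step 3: Go back to the menu bar and select Develop > Empty Caches (keyboard shortcut: Option+Command+E).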

Opera

To clear cache memory in Opera, follow these steps:

- Step 1: Open Opera and click on the three horizontal lines icon in the upper-left corner.

- Step 2: Select Settings > Privacy & Security.

- Step 3: Click Clear browsing data, then select Cached images and files and click Clear data.

Clearing the cache resolves issues with outdated webpage versions and removes locally stored copies of the pages you have visited, which helps protect your privacy, especially on shared computers. Clearing it occasionally also keeps the browser from accumulating unnecessary data, although pages may load a little more slowly right afterward while the cache is rebuilt.

Key Cache-Related Terms You Should Know

When working with cache, there are several important terms and concepts that you should understand in order to optimize performance and effectively manage cache memory. Below are the common terms related to cache that you will encounter in systems and applications.

Cache Hit and Cache Miss

- Cache Hit: When the system finds the requested data in the cache, the process is called a cache hit. This saves time and resources because the data is already in memory.

- Cache Miss: When the data is not available in the cache and needs to be retrieved from the primary storage or an external system, the process is called a cache miss. Cache misses typically increase latency and require more resources.

Cache Eviction

Cache eviction is the process of removing old or unnecessary data from the cache to free up space for new data. Algorithms such as LRU (Least Recently Used), LFU (Least Frequently Used), and FIFO (First In, First Out) are commonly used to determine which data should be removed when the cache is full.

Cache Latency

Cache latency is the time it takes to retrieve data from the cache. Low cache latency is one of the key factors in improving system performance, especially in applications requiring high speed.

Write Through and Write Back

- Write Through: In a write through strategy, any changes to data in the cache are immediately written to the primary storage. This ensures that the data in the cache and the primary storage are always synchronized.

- Write Back: In contrast, in write back, changes are only written to primary storage when the data is evicted from the cache. This reduces the number of writes to the primary storage but can lead to data inconsistency between the cache and primary storage.

Cache Coherence

Cache coherence refers to the consistency of data across multiple cache memories in distributed systems. In multi-processor or distributed server systems, there can be multiple copies of a single piece of data in different cache memories, and ensuring consistency among these copies is crucial.

Cache Poisoning

Cache poisoning is a security attack in which an attacker injects incorrect or malicious data into a cache (as in DNS cache poisoning or web cache poisoning), so that later users receive the tampered data. Systems must have mechanisms to verify data in the cache and ensure that only valid data is stored.

Cache Warmup

Cache warmup is the process of preloading the cache with data that is likely to be requested before real traffic arrives. In large systems, the cache may be empty after an application restarts, so the first requests would all be cache misses. Warming up the cache reduces load times and keeps performance steady during that initial period.
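As a minimal illustration, the Python sketch below warms an in-process `functools.lru_cache` by calling the cached function for items expected to be popular. `render_product_page` and the list of hot IDs are hypothetical; in a real system the hot keys might come from logs or analytics.

```python
from functools import lru_cache


@lru_cache(maxsize=1024)
def render_product_page(product_id: int) -> str:
    # Hypothetical expensive operation (database reads, templating, ...).
    return f"<html>product {product_id}</html>"


def warm_up(hot_ids):
    """Pre-populate the cache with items expected to be requested first."""
    for pid in hot_ids:
        render_product_page(pid)


warm_up([1, 2, 3])
render_product_page(1)  # the first real request is already a cache hit
print(render_product_page.cache_info().hits)  # 1
```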

Cache Invalidation

Cache invalidation is the process of making data in the cache invalid. This typically occurs when data in the primary storage is changed or updated. The cached copies must be removed or refreshed to ensure data accuracy.
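The simplest invalidation strategy is to delete the cached copy whenever the underlying data changes, so the next read fetches the fresh value. This Python sketch assumes two plain dictionaries stand in for the primary store and the cache; the function names are hypothetical.

```python
# Hypothetical primary store and cache, both plain dicts for illustration.
database = {"price:42": 100}
cache = {}


def read_price(key):
    if key in cache:
        return cache[key]      # cache hit
    value = database[key]      # cache miss: read from primary storage
    cache[key] = value
    return value


def update_price(key, value):
    database[key] = value
    cache.pop(key, None)  # invalidate so the next read fetches the new value


read_price("price:42")          # caches 100
update_price("price:42", 120)   # writes 120 and drops the stale copy
print(read_price("price:42"))   # 120
```

Without the `cache.pop` call in `update_price`, the final read would still return the stale value `100`.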

Conclusion

In this article, we have explored cache – memory storage that plays a critical role in optimizing system performance for computers and web applications. Cache not only reduces latency but also saves system resources by temporarily storing frequently requested data. Proper use of cache can lead to significant performance improvements, especially for systems and applications that require fast processing, such as web servers, databases, and mobile applications.

However, to achieve optimal results from cache, it's important to understand cache algorithms, operating mechanisms, and strategies such as write-through and write-back. Knowing when cache is the appropriate solution and how to clear cache memory when necessary is also essential to maintaining long-term system performance.

Additionally, understanding terms like cache hit, cache miss, and cache eviction will help you build and maintain an effective cache system, avoiding issues like high latency or excessive resource consumption. Optimization strategies such as cache warmup and cache invalidation should also be applied to ensure data accuracy and cache stability.

Finally, cache is not a one-size-fits-all solution for every performance issue. Improper use of cache or poor cache management can lead to serious issues such as cache poisoning or cache inconsistency. Therefore, combining optimal strategies with regular monitoring is essential to maximize the benefits cache can provide.

We hope this article helps you better understand cache, how to use it, and how to optimize cache memory effectively to improve system performance and enhance user experience.
